UCB: AIsys Catalogue

MLsys Course Description

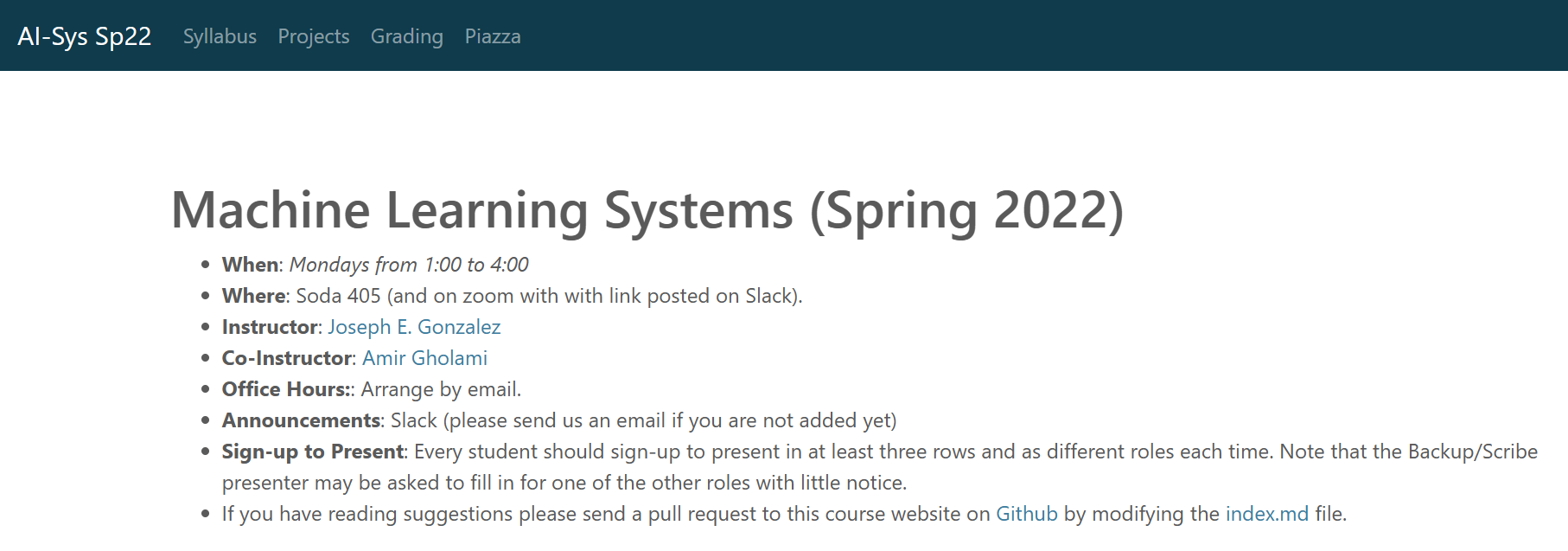

The recent success of AI has been due in large part to advances in hardware and software systems. These systems have enabled the training of increasingly complex models on ever-larger datasets. In the process, these systems have also simplified model development, enabling the rapid growth of the machine learning community. These new hardware and software systems include a new generation of GPUs and hardware accelerators (e.g., TPUs), open-source frameworks such as Theano, TensorFlow, PyTorch, MXNet, Apache Spark, Clipper, Horovod, and Ray, and a myriad of systems deployed internally at companies, to name a few. At the same time, we are witnessing a flurry of ML/RL applications to improve hardware and system designs, job scheduling, program synthesis, and circuit layouts.

In this course, we will describe the latest trends in system design to better support the next generation of AI applications, as well as applications of AI that optimize the architecture and performance of systems. The format of this course will be a mix of lectures, seminar-style discussions, and student presentations. Students will be responsible for reading papers and completing a hands-on project. For projects, we strongly encourage teams that contain both AI and systems students.

Paper List

1. Introduction and Course Overview

Required Reading

✅ SysML: The New Frontier of Machine Learning Systems
Read Chapter 1 of Principles of Computer System Design. You will need to be on campus or use the Library VPN to obtain a free PDF.
✅ A Few Useful Things to Know About Machine Learning

Additional Optional Reading

✅ How to Read a Paper provides useful advice on reading papers effectively.
✅ Timothy Roscoe’s Writing Reviews for Systems Conferences will also help you in the reviewing process.

2. Big Data Systems

Required Reading

✅ Towards a Unified Architecture for in-RDBMS Analytics
✅ Resilient Distributed Datasets: A Fault-Tolerant Abstraction for In-Memory Cluster Computing
✅ Lakehouse: A New Generation of Open Platforms that Unify Data Warehousing and Advanced Analytics

Additional Optional Reading

✅ Delta Lake: High-Performance ACID Table Storage over Cloud Object Stores
✅ Spark SQL: Relational Data Processing in Spark
🔲 The MADlib Analytics Library or MAD Skills, the SQL

3. Hardware for Machine Learning

Required Reading

✅ Mixed Precision Training (ICLR’18)
✅ Eyeriss: A Spatial Architecture for Energy-Efficient Dataflow for Convolutional Neural Networks (ISCA’16)
✅ Interstellar: Using Halide’s Scheduling Language to Analyze DNN Accelerators (formerly: DNN Dataflow Choice Is Overrated)
✅ Gemmini: Enabling Systematic Deep-Learning Architecture Evaluation via Full-Stack Integration (DAC’21, Best Paper Award)

Additional Optional Reading

✅ A New Golden Age for Computer Architecture
🔲 Roofline: An Insightful Visual Performance Model for Floating-Point Programs and Multicore Architectures

4. Distributed Deep Learning, Part I: Systems

Required Reading

🔲 Chimera: Efficiently Training Large-Scale Neural Networks with Bidirectional Pipelines (SC’21, Best Paper finalist)
🔲 Efficient Large-Scale Language Model Training on GPU Clusters Using Megatron-LM (SC’21, Best Student Paper)
🔲 ZeRO-Infinity: Breaking the GPU Memory Wall for Extreme Scale Deep Learning (SC’21)

Additional Optional Reading

🔲 DeepSpeed: Advancing MoE inference and training to power next-generation AI scale (blog post)
🔲 Large Scale Distributed Deep Networks (NeurIPS’12)
🔲 GPipe: Efficient Training of Giant Neural Networks using Pipeline Parallelism (NeurIPS’19)
🔲 PipeDream: Fast and Efficient Pipeline Parallel DNN Training (SOSP’19)

5. Distributed Deep Learning, Part II: Scaling Constraints

Required Reading

🔲 Measuring the Effects of Data Parallelism on Neural Network Training
🔲 Scaling Laws for Neural Language Models (OpenAI, 2020)
🔲 Deep Learning Training in Facebook Data Centers: Design of Scale-up and Scale-out Systems

Additional Optional Reading

🔲 On Large-Batch Training for Deep Learning: Generalization Gap and Sharp Minima (ICLR’17)
🔲 Train Large, Then Compress: Rethinking Model Size for Efficient Training and Inference of Transformers (ICML’20)
🔲 Large Batch Optimization for Deep Learning: Training BERT in 76 minutes (ICLR’20)
🔲 Scaling Vision Transformers

6. Machine Learning Applied to Systems

Required Reading

🔲 The Case for Learned Index Structures (SIGMOD’18)
🔲 Device Placement Optimization with Reinforcement Learning (ICML’17)
🔲 Neural Adaptive Video Streaming with Pensieve (SIGCOMM’17)

7. Machine Learning Frameworks and Automatic Differentiation

Required Reading

🔲 Automatic differentiation in ML: Where we are and where we should be going
🔲 TensorFlow: A System for Large-Scale Machine Learning

8. Efficient Machine Learning

Required Reading

🔲 Linear Mode Connectivity and the Lottery Ticket Hypothesis
🔲 Integer-only Quantization of Neural Networks for Efficient Integer-Arithmetic-Only Inference
🔲 FBNet: Hardware-Aware Efficient ConvNet Design via Differentiable Neural Architecture Search

Additional Optional Reading

🔲 Hessian Aware trace-Weighted Quantization of Neural Networks
🔲 The State of Sparsity in Deep Neural Networks
🔲 Sparsity in Deep Learning: Pruning and growth for efficient inference and training in neural networks

9. Fundamentals of Machine Learning in the Cloud, the Modern Data Stack

Required Reading

🔲 The Sky Above The Clouds
🔲 FrugalML: How to Use ML Prediction APIs More Accurately and Cheaply
🔲 Pollux: Co-adaptive Cluster Scheduling for Goodput-Optimized Deep Learning

Additional Optional Reading

🔲 RubberBand: cloud-based hyperparameter tuning

10. Benchmarking Machine Learning Workloads

Required Reading

🔲 MLPerf Training Benchmark
🔲 MLPerf Inference Benchmark
🔲 Benchmark Analysis of Representative Deep Neural Network Architectures

11. Machine Learning and Security

Required Reading

🔲 Communication-Efficient Learning of Deep Networks from Decentralized Data
🔲 Privacy Accounting and Quality Control in the Sage Differentially Private ML Platform
🔲 Robust Physical-World Attacks on Deep Learning Models

Additional Optional Reading

🔲 Helen: Maliciously Secure Coopetitive Learning for Linear Models
🔲 Faster CryptoNets: Leveraging Sparsity for Real-World Encrypted Inference
🔲 Rendered Insecure: GPU Side Channel Attacks are Practical
🔲 The Algorithmic Foundations of Differential Privacy
🔲 Federated Learning: Collaborative Machine Learning without Centralized Training Data
🔲 Federated Learning at Google … A comic strip?
🔲 SecureML: A System for Scalable Privacy-Preserving Machine Learning